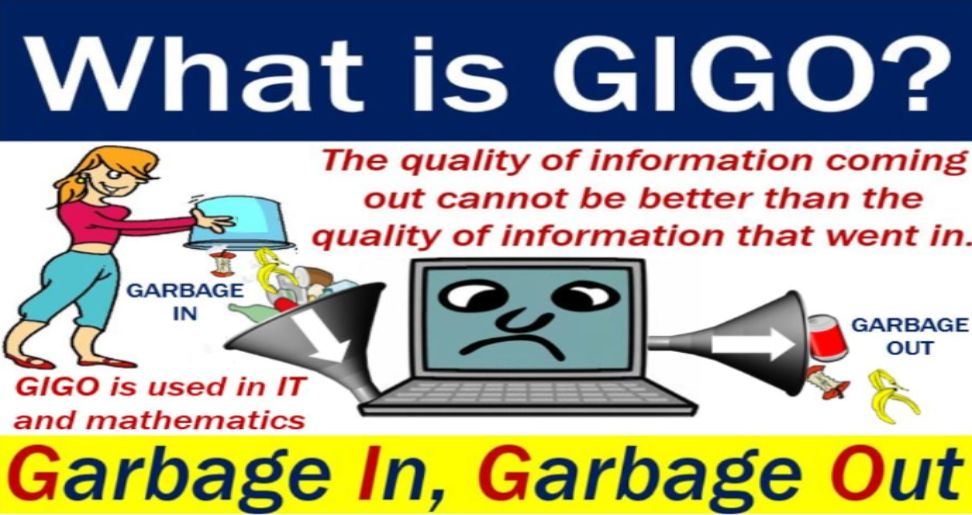

The acronym “GIGO” stands for “Garbage In, Garbage Out.” It’s a fundamental concept in computer science and data processing that emphasizes the importance of data quality in producing accurate and meaningful results. In essence, GIGO states that if you input low-quality, inaccurate, or incomplete data into a system, you can expect to receive low-quality, inaccurate, or incomplete output.

GIGO – short history

The acronym “GIGO” stands for “Garbage In, Garbage Out,” a principle in computing that emphasizes the importance of data quality in producing accurate results. While the exact origin of the phrase is unclear, it’s likely rooted in the early days of computing when data was often entered manually and prone to errors. As computers became more complex and capable of processing vast amounts of information, the realization that inaccurate or incomplete data would inevitably lead to inaccurate or useless output became increasingly apparent. This concept has remained a fundamental principle in computer science and data management, underscoring the critical need for data integrity and quality control in ensuring reliable and meaningful results.

Why GIGO Matters

The GIGO principle is crucial for several reasons:

- Data Integrity: Data integrity refers to the accuracy, consistency, and reliability of data throughout its lifecycle. It ensures that data remains accurate, complete, and free from errors or inconsistencies. This is crucial for making informed decisions, maintaining data quality, and preventing data breaches. Data integrity is achieved through various measures, including data validation, error checking, data cleansing, and proper data governance. By prioritizing data integrity, organizations can safeguard their valuable assets, enhance operational efficiency, and build trust with stakeholders

- Decision Making: Decision making is a complex cognitive process that involves evaluating information, considering alternatives, and selecting a course of action. It’s a fundamental aspect of human behavior, influencing everything from personal choices to organizational strategies. Effective decision making requires a combination of critical thinking, problem-solving skills, and emotional intelligence. While there are various decision-making models and approaches, the common goal is to make informed and rational choices that align with individual or organizational objectives. Factors such as risk tolerance, time constraints, and available resources often play significant roles in the decision-making process.

- System Efficiency: System efficiency refers to the ability of a system to maximize output while minimizing input. In simpler terms, it measures how well a system uses resources to achieve its intended goals. A highly efficient system operates smoothly, minimizes waste, and produces optimal results. Efficiency is a critical factor in various fields, including engineering, manufacturing, economics, and technology. By optimizing efficiency, organizations can reduce costs, improve productivity, and enhance overall performance.

- Trust and Reputation: Trust and reputation are two interconnected concepts that are fundamental to the success of individuals and organizations. Trust is the belief that someone or something is reliable, honest, and competent. It is the foundation upon which relationships are built and maintained. Reputation, on the other hand, is the public perception of a person or organization. It is the collective opinion formed based on past actions, behaviors, and interactions. A positive reputation is essential for building trust, as it demonstrates reliability, integrity, and credibility. Conversely, a negative reputation can erode trust and damage relationships. Trust and reputation are interdependent; a strong reputation fosters trust, while trust reinforces a positive reputation. Ultimately, both trust and reputation are essential for building successful relationships, fostering collaboration, and achieving long-term goals.

Common Causes of GIGO

GIGO can be caused by a variety of factors, including:

- Data Entry Errors: Data entry errors, a common occurrence in any organization that relies on manual data input, can significantly impact the accuracy and reliability of information. These errors can arise from various factors, such as typos, misinterpretations, or incorrect data formatting. Common examples include entering incorrect numbers, transposing digits, or omitting essential information. The consequences of data entry errors can be far-reaching, leading to inaccurate reports, faulty decision-making, and financial losses. To mitigate the risk of data entry errors, organizations often implement measures like double-entry systems, data validation checks, and employee training to enhance accuracy and minimize the negative impact on operations.

- Data Quality Issues: Data quality is a critical aspect of any data-driven initiative. Unfortunately, data collected from various sources often suffers from numerous quality issues that can significantly impact the accuracy and reliability of analysis and decision-making. Common data quality problems include incompleteness, where data is missing essential attributes or values; inaccuracy, where data contains errors or inconsistencies; inconsistency, where data is represented in different formats or standards; redundancy, where the same data is duplicated across multiple sources; timeliness, where data is outdated or not available in a timely manner; and validity, where data does not conform to predefined rules or constraints. These issues can arise due to various factors such as data entry errors, system failures, data integration challenges, and changes in data sources or definitions. Addressing data quality issues requires a comprehensive approach involving data cleaning, validation, standardization, and ongoing monitoring to ensure the integrity and trustworthiness of the data used for analysis and decision-making.

- System Limitations: While the system offers a robust platform for various tasks, it’s important to acknowledge certain inherent limitations. The system’s performance can be influenced by factors such as network connectivity, hardware specifications, and the complexity of the tasks being performed. Additionally, the system’s ability to process and understand information is dependent on the quality and quantity of data it has been trained on. There may be instances where the system struggles to provide accurate or relevant responses, particularly when faced with ambiguous or highly specialized queries. Furthermore, the system is susceptible to biases present in the data it was trained on, which can lead to unintended discriminatory outcomes.

Preventing GIGO

To prevent GIGO, organizations should implement data quality management practices, including:

- Data Cleaning: Data Cleaning is a crucial preprocessing step in data analysis and machine learning. It involves identifying and correcting errors, inconsistencies, or missing values within a dataset. This process ensures data quality and reliability, which is essential for accurate modeling and meaningful insights. Common data cleaning techniques include:

- Handling Missing Values: Replacing missing values with appropriate values (e.g., mean, median, mode) or removing rows/columns with excessive missing data.

- Detecting and Correcting Outliers: Identifying and removing or correcting extreme values that deviate significantly from the majority of the data.

- Addressing Inconsistent Data: Standardizing data formats, units, and values to ensure consistency across the dataset.

- Removing Duplicates: Identifying and removing duplicate records to avoid redundancy and bias in analysis.

- Data Imputation: Filling in missing values using statistical methods or machine learning techniques.

By effectively cleaning the data, analysts can improve the accuracy and reliability of their models, leading to more meaningful and actionable results.

- Data Validation: Implementing rules and checks to ensure that data meets specific criteria.

- Data Standardization: Establishing consistent data formats and definitions.

- Data Governance: Data Governance is a comprehensive set of policies, procedures, and practices designed to ensure the effective management of an organization’s data assets. It involves establishing and maintaining control over data quality, accessibility, security, and integrity throughout its lifecycle. Data governance frameworks typically include elements such as data classification, data quality management, data security measures, data retention policies, and data usage guidelines. By implementing robust data governance practices, organizations can improve decision-making, enhance operational efficiency, mitigate risks, and comply with regulatory requirements.

Read More :

Featured Image Source: https://tinyurl.com/m68rwm7m